High Performance Serialization in C#

Tuesday, March 27, 2018

An endpoint for incoming sensor data running on Windows Azure showed high CPU load. It would have been too early to scale out further, and we thought a bit of optimization should give us better resource utilization.

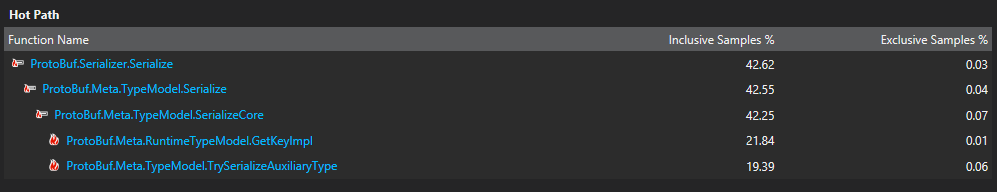

To find out where the time went, we recorded about 10 minutes of live incoming data into a SQL database, downloaded it into a local SQL Server, and wrote a simple console application that processes the recorded data exactly as the endpoints under high CPU load do. A quick run with the Visual Studio 2017 performance profiler showed a surprising hot path.

The endpoint parses only as much of the incoming sensor data as it needs to route it to the correct queues for further processing, alerting, and permanent storage, depending on the type of sensor data received. The data structure needed for further processing has to be serialized into a byte array before it can be put into the queue. According to the Visual Studio performance profiler, this serialization step is where most of the CPU time is spent when processing sensor data.

We use ProtoBuf for serialization, which is already supposed to be fast and efficient, but some research into the current state of serialization for .NET pointed to ZeroFormatter as an alternative for our specific use case.

Switching from ProtoBuf to ZeroFormatter was very easy.

Instead of

ProtoBuf.Serializer.Serialize<List<QueuePacket>>(serializedData, measurements);

we now use

ZeroFormatterSerializer.Serialize(serializedData, measurements);
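For the call above to work, the serialized type has to carry ZeroFormatter's annotations. The real `QueuePacket` is not shown in this post, so the following is only a minimal sketch with made-up members (`SensorId`, `TimestampTicks`, `Value`) and hypothetical helper names (`ToQueuePayload`, `FromQueuePayload`). It assumes the ZeroFormatter NuGet package is referenced and shows a round trip through a `MemoryStream` into the byte array that would go onto the queue.

```csharp
using System;
using System.Collections.Generic;
using System.IO;
using ZeroFormatter;

// Hypothetical stand-in for the real QueuePacket, whose members are not shown in this post.
[ZeroFormattable]
public class QueuePacket
{
    // ZeroFormatter expects public virtual properties, each tagged with an [Index].
    [Index(0)] public virtual int SensorId { get; set; }
    [Index(1)] public virtual long TimestampTicks { get; set; }
    [Index(2)] public virtual double Value { get; set; }
}

public static class QueueSerialization
{
    // Serializes the packets into the byte array that would be put into the queue.
    public static byte[] ToQueuePayload(IList<QueuePacket> measurements)
    {
        using (var serializedData = new MemoryStream())
        {
            ZeroFormatterSerializer.Serialize(serializedData, measurements);
            return serializedData.ToArray();
        }
    }

    // Reads the packets back on the consuming side of the queue.
    public static IList<QueuePacket> FromQueuePayload(byte[] payload)
    {
        return ZeroFormatterSerializer.Deserialize<IList<QueuePacket>>(payload);
    }
}
```

The sketch declares the list as `IList<QueuePacket>` because that is one of the collection interfaces ZeroFormatter documents as supported; whether the production code needs a different collection type depends on how `measurements` is declared there.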

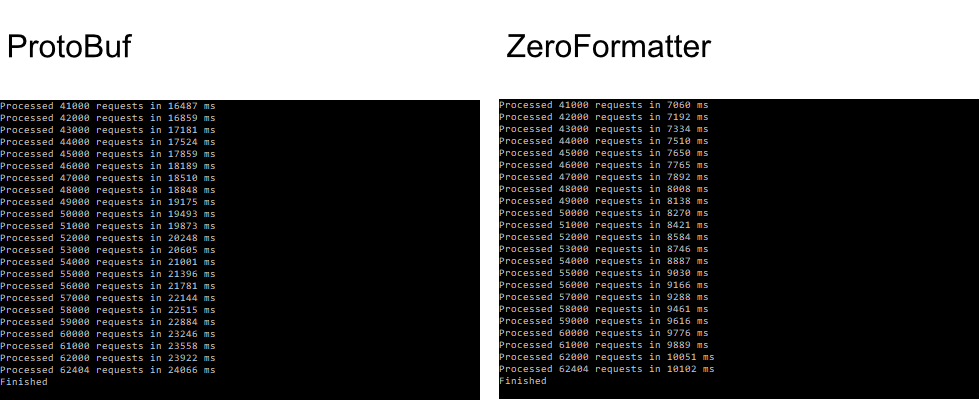

The results were amazing. Our benchmark ran more than twice as fast with ZeroFormatter as with ProtoBuf.
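A comparison like this can be timed with a small harness around `System.Diagnostics.Stopwatch`. This is not the console application we used, only a sketch of the general approach; `SerializerBenchmark`, `Measure` and `Speedup` are hypothetical names, and the warm-up count is an arbitrary assumption.

```csharp
using System;
using System.Diagnostics;

public static class SerializerBenchmark
{
    // Runs the action `iterations` times after a warm-up and returns the elapsed wall-clock time.
    public static TimeSpan Measure(Action serialize, int iterations, int warmupIterations = 100)
    {
        if (serialize == null) throw new ArgumentNullException(nameof(serialize));
        if (iterations <= 0) throw new ArgumentOutOfRangeException(nameof(iterations));

        // Warm-up so JIT compilation and one-time serializer setup are not measured.
        for (int i = 0; i < warmupIterations; i++)
        {
            serialize();
        }

        var stopwatch = Stopwatch.StartNew();
        for (int i = 0; i < iterations; i++)
        {
            serialize();
        }
        stopwatch.Stop();
        return stopwatch.Elapsed;
    }

    // A ratio above 1 means the candidate finished faster than the baseline.
    public static double Speedup(TimeSpan baseline, TimeSpan candidate)
    {
        return baseline.TotalMilliseconds / candidate.TotalMilliseconds;
    }
}
```

Each serializer call would be passed in as a lambda that serializes the same recorded data, and the two elapsed times fed to `Speedup`. For results that hold up under scrutiny, a dedicated benchmarking library is the safer choice, since a plain loop does not account for garbage collection pauses or run-to-run variance.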

Blog / Patrick Dehne